Using UAS technology to collect and process volumetric data is one of the biggest advancements in UAS use and one of the strongest arguments for it. Flying over an area, capturing a series of images and then processing them is not only efficient but also accurate when the correct methods are used. A number of figures can be calculated, including volume, surface area and elevation; this lab focuses on volume. A company may want volumetric data for several reasons. Knowing the volume of a given area can be very important for logistics. In mining, it is important to know how much material is in a given area in order to estimate the cost of processing it and the number of trucks or train cars required to move it. Volume is also crucial where contractors are paid on a material-moved basis. These calculations are often made every month to monitor efficiency and the overall amount of product moved. Because the measurements are repeated so frequently, each one carries a recurring cost, and with most methods that cost is significant. Volumes can be calculated by a surveying team, with LIDAR or with a UAS. Doing volumetric calculations with a UAS is not only cost-effective but also improves worker safety, because in areas such as open pit mines a surveying crew no longer needs to walk the site and risk injury. Volumetric data can be calculated from UAS imagery with several methods and programs. Pix4D is perhaps the quickest and most straightforward, but ArcMap, along with a set of tools, can also be used to calculate volumes.

Methods:

Volume from Raster

ArcMap offers a couple of methods for calculating volumetric data. The first uses 3D Analyst to calculate the volume of a raster clip, which requires several tools and steps. The first step is creating a polygon feature class. When doing volumetric calculations, it is important to focus only on the area you want data for. In this instance, volumetric data was calculated for three separate "piles" of material. When creating a feature class for each pile, it is important to include only the intended pile; if the polygon includes other piles or cuts off part of the intended pile, the data cannot be considered accurate. Once the piles are defined (Figure 1), the next step is "Extract by Mask", which removes all of the area surrounding the piles, since it is not needed for the calculation. Once each feature is extracted, only the intended areas remain (Figure 2).

Figure 1 - Masked polygons at Litchfield Mine site.

Figure 2 - Extracted piles
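As a rough illustration of what "Extract by Mask" does, the minimal Python sketch below keeps only the DEM cells whose centers fall inside a pile polygon and sets every other cell to NoData. It is not ArcMap's implementation: the function names are hypothetical, the DEM is assumed to be a plain list of rows with row 0 at the top (north) edge, and None stands in for NoData.

```python
# Simplified, hypothetical stand-in for "Extract by Mask": cells whose
# centers fall outside the pile polygon become NoData (None here).

def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from (x, y) cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def extract_by_mask(dem, origin_x, origin_y, cell_size, polygon):
    """Return a copy of the DEM with cells outside the polygon set to None.

    dem[row][col] holds elevations; row 0 is the top (northern) row and
    (origin_x, origin_y) is the top-left corner of the grid.
    """
    clipped = []
    for r, row in enumerate(dem):
        new_row = []
        for c, z in enumerate(row):
            cx = origin_x + (c + 0.5) * cell_size  # cell-center easting
            cy = origin_y - (r + 0.5) * cell_size  # cell-center northing
            new_row.append(z if point_in_polygon(cx, cy, polygon) else None)
        clipped.append(new_row)
    return clipped
```

A polygon that strays onto a neighboring pile keeps that pile's cells as well, which is why the polygon has to follow the intended pile closely.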

Once the piles are separated, the next step is noting the elevation of each pile area with the "Identify" tool (Figure 3). This step is crucial because that elevation is needed as the plane height in the next tool, "Surface Volume" (Figure 4).

Figure 3 - Identify tool symbol

Figure 4 - Surface Volume tool
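Assuming the reference plane is set to measure volume above the plane height, the calculation behind "Surface Volume" can be approximated as in the sketch below: every remaining cell whose elevation is above the plane contributes its height above the plane multiplied by the cell area. This is a simplified cell-sum approximation that assumes NoData cells are stored as None; it is not necessarily how 3D Analyst computes the volume internally, and `surface_volume_above` is a hypothetical name.

```python
def surface_volume_above(clipped_dem, cell_size, plane_height):
    """Approximate volume of material above a horizontal reference plane.

    Each non-NoData cell adds (z - plane_height) * cell area when the
    surface is above the plane; cells at or below the plane add nothing.
    """
    cell_area = cell_size * cell_size
    volume = 0.0
    for row in clipped_dem:
        for z in row:
            if z is None:  # NoData cell removed by the mask
                continue
            height = z - plane_height
            if height > 0:
                volume += height * cell_area
    return volume
```

In this approximation, any ground left inside the mask that sits above the plane height is counted as pile material, which is one way a raster volume can end up slightly high.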

The model below (Figure 5) shows the entire process needed to calculate volume using a raster.

Figure 5 - Volume from a raster model

Volume from TIN

The next method calculates volume in ArcMap using a TIN (Triangulated Irregular Network). The first step is converting the raster clips created in the previous method with the "Raster to TIN" tool (Figure 6). A TIN models the surface as a network of triangles, which can give it better surface definition (Figure 7). Once the tool finishes, the next step is running the "Add Surface Information" tool (Figure 8) with each TIN as the input surface, specifically adding the "Z_Mean" value. This tool is useful because ArcMap generally ignores elevation values unless they are explicitly requested with tools such as this one.

Figure 6 - Raster to TIN tool

Figure 7 - Image featuring converted TINs

Figure 8 - Add Surface Information tool
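Because elevations inside a TIN face are interpolated linearly, the mean elevation over one triangle is the average of its three vertex elevations, and a mean for a whole pile can be taken as an area-weighted average over its triangles. The sketch below shows that calculation only to illustrate what a "Z_Mean" value represents. It assumes the triangles already lie inside the pile polygon (no clipping at the boundary), uses hypothetical function names, and is not a description of how "Add Surface Information" is implemented.

```python
def triangle_area(p1, p2, p3):
    """Planimetric (2D) area of a triangle from (x, y, z) vertices."""
    return abs((p2[0] - p1[0]) * (p3[1] - p1[1])
               - (p3[0] - p1[0]) * (p2[1] - p1[1])) / 2.0


def tin_z_mean(triangles):
    """Area-weighted mean elevation of a set of TIN triangles.

    With linear interpolation inside each face, the mean z over a
    triangle equals the average of its three vertex elevations.
    """
    total_area = 0.0
    weighted_z = 0.0
    for p1, p2, p3 in triangles:
        area = triangle_area(p1, p2, p3)
        total_area += area
        weighted_z += area * (p1[2] + p2[2] + p3[2]) / 3.0
    if total_area == 0:
        raise ValueError("triangles have zero total area")
    return weighted_z / total_area
```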

The last step is the "Polygon Volume" tool (Figure 9). It is used instead of "Surface Volume" because it calculates the volume inside each pile polygon relative to a height stored in a field, here the average surface elevation (Z_Mean) added by the "Add Surface Information" tool, rather than a single plane height entered by hand. Once the tool finishes, the volume can be found by clicking the pile with the "Identify" tool.

Figure 9 - Polygon Volume tool
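The idea behind measuring a TIN against a height such as Z_Mean can be sketched as follows: each TIN face is clipped to the part above the plane height, and each clipped piece contributes its planimetric area times its mean height above the plane. The sketch assumes the triangles are already limited to the pile footprint and that the volume above the plane is wanted; the function names are hypothetical, and this is not the 3D Analyst implementation of "Polygon Volume".

```python
def triangle_area_2d(a, b, c):
    """Planimetric area of a triangle from (x, y, z) vertices."""
    return abs((b[0] - a[0]) * (c[1] - a[1])
               - (c[0] - a[0]) * (b[1] - a[1])) / 2.0


def clip_above(triangle, h):
    """Clip a triangle (list of (x, y, z)) to the part where z >= h.

    z varies linearly along each edge, so this is Sutherland-Hodgman
    clipping against the single plane z = h. Returns a convex polygon.
    """
    out = []
    n = len(triangle)
    for i in range(n):
        cur = triangle[i]
        nxt = triangle[(i + 1) % n]
        cur_in = cur[2] >= h
        nxt_in = nxt[2] >= h
        if cur_in:
            out.append(cur)
        if cur_in != nxt_in:
            t = (h - cur[2]) / (nxt[2] - cur[2])  # where the edge meets z = h
            out.append((cur[0] + t * (nxt[0] - cur[0]),
                        cur[1] + t * (nxt[1] - cur[1]),
                        h))
    return out


def volume_above_plane(triangles, h):
    """Volume between the TIN faces and the plane z = h, above the plane only."""
    volume = 0.0
    for tri in triangles:
        poly = clip_above(list(tri), h)
        # Fan-triangulate the clipped polygon; on each piece z - h is linear,
        # so its volume is planimetric area * mean vertex height above h.
        for i in range(1, len(poly) - 1):
            a, b, c = poly[0], poly[i], poly[i + 1]
            mean_height = (a[2] + b[2] + c[2]) / 3.0 - h
            volume += triangle_area_2d(a, b, c) * mean_height
    return volume
```

Because z varies linearly across each face, the per-piece formula is exact for the TIN surface itself; the accuracy limit is how well the triangles describe the real pile.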

The model below (Figure 10) shows the entire process needed to calculate volume using a TIN.

Figure 10 - Complete TIN volume model

Volume from Pix4D

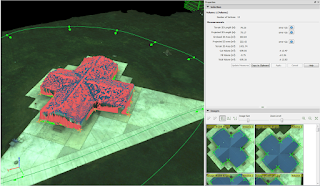

The final method explored in this lab uses Pix4D, a program used in previous labs, most notably Activity 7, where the volume of a pavilion was found.

Calculating volume in Pix4D is extremely simple. In the "Measure" area of the top menu bar, select "Volume". With the tool, place points around the base of the area (in this case, the pile) until it is completely enclosed (Figure 11). Once complete, click "Calculate Values" and the volume of the selected area is displayed.

Figure 11 - Calculating volume in Pix4D
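How Pix4D computes the volume is not described in this lab, so the sketch below is only an assumed approximation of the idea: fit a base surface through the points placed around the pile, then add up the height of the surface model above that base for every cell inside the outline. Here the base is a least-squares plane, the surface model (DSM) is a plain list of rows with row 0 at the top edge, and all function names are hypothetical; Pix4D offers its own base-surface options, which may give different results.

```python
# Hypothetical stockpile volume: least-squares base plane through the
# outline points, then DSM height above that plane summed inside the outline.

def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            if x < x1 + (y - y1) * (x2 - x1) / (y2 - y1):
                inside = not inside
    return inside


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_base_plane(base_points):
    """Least-squares plane z = a*x + b*y + c via the normal equations."""
    n = len(base_points)
    sx = sum(p[0] for p in base_points)
    sy = sum(p[1] for p in base_points)
    sz = sum(p[2] for p in base_points)
    sxx = sum(p[0] * p[0] for p in base_points)
    syy = sum(p[1] * p[1] for p in base_points)
    sxy = sum(p[0] * p[1] for p in base_points)
    sxz = sum(p[0] * p[2] for p in base_points)
    syz = sum(p[1] * p[2] for p in base_points)
    matrix = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = det3(matrix)
    if abs(d) < 1e-12:
        raise ValueError("base points are collinear; cannot fit a plane")
    coeffs = []
    for k in range(3):
        # Cramer's rule: replace column k with the right-hand side.
        m = [row[:] for row in matrix]
        for r in range(3):
            m[r][k] = rhs[r]
        coeffs.append(det3(m) / d)
    return tuple(coeffs)  # (a, b, c)


def stockpile_volume(dsm, origin_x, origin_y, cell_size, base_points):
    """Volume of the DSM above the fitted base plane, inside the outline.

    base_points are the (x, y, z) vertices placed around the pile, in order;
    (origin_x, origin_y) is the top-left corner of the DSM grid.
    """
    a, b, c = fit_base_plane(base_points)
    outline = [(x, y) for x, y, _ in base_points]
    cell_area = cell_size * cell_size
    volume = 0.0
    for r, row in enumerate(dsm):
        for col, z in enumerate(row):
            cx = origin_x + (col + 0.5) * cell_size
            cy = origin_y - (r + 0.5) * cell_size
            if point_in_polygon(cx, cy, outline):
                height = z - (a * cx + b * cy + c)
                if height > 0:  # count only material above the base
                    volume += height * cell_area
    return volume
```

With large projected coordinates, subtracting a local origin from x and y before fitting keeps the normal equations well conditioned.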

Results

Looking at the results table, there are some differences between the methods. The raster and Pix4D volumes are the closest to each other. It makes sense that the raster volumes are the highest, because with this method some volume from the surrounding area may end up included in the result; in essence, the volume is slightly overestimated.
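To put numbers on "closest" and "overestimated", each method's volume can be expressed as a percent difference from one chosen reference. The small helper below does that; treating one method (for example Pix4D) as the reference is an assumption made for illustration only, since none of the three methods is known to give the true volume, and the function names are hypothetical.

```python
def percent_difference(volume, reference):
    """Signed difference of volume from reference, as a percent of reference."""
    if reference == 0:
        raise ValueError("reference volume must be non-zero")
    return (volume - reference) / reference * 100.0


def compare_methods(volumes, reference_method):
    """Percent difference of each method's volume from the reference method.

    volumes maps a method name to its computed volume for one pile.
    """
    ref = volumes[reference_method]
    return {method: percent_difference(v, ref)
            for method, v in volumes.items() if method != reference_method}
```

A positive value means the method reports more volume than the reference, a negative value less.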

The TIN volumes differ considerably from the others, which makes sense given the default values used. When creating the TINs, the default maximum of 1,500,000 points was kept. This makes the process relatively quick but, as the table shows, much less accurate. Selecting 15,000,000 or even 25,000,000 points would likely make the data much more accurate but take longer to calculate. With 1,500,000 points, the TIN was very simple and not as detailed as the actual pile.

In terms of accuracy, the raster and Pix4D methods produce the best results. If time is an issue, Pix4D is the clear winner. Although the raster method has fewer steps than the TIN method, it took longer to process, but it produced more accurate results. Pix4D seems like the best overall method: its simplicity highlights how powerful the program is, and volume calculation is just one of many tasks it handles efficiently.

Conclusion

When using UAS to calculate volumes, many methods are possible. Picking the right one depends on the project at hand and the resources at your disposal. As with most data processing, the better your computer's specifications, the faster and more efficiently the data will be processed. Not every company has Pix4D available; in that case, programs such as ArcMap can be used instead and still give accurate results. In a professional setting where money is exchanged and accuracy is key, it is important to be diligent and triple-check results so that both parties are satisfied. For open pit mines, UAS are an excellent fit: they are efficient, cost-effective and accurate, and they improve safety.